NFS allows programs on one system to access files on another system transparently by mounting the remote directory. Usually, when the server is booted, directories are made available by the exportfs command, and the daemons to handle remote access (nfsd daemons) are started. Similarly, the mounts of the remote directories and the initiation of the appropriate numbers of NFS block I/O daemons (biod daemon) to handle remote access are performed during client system boot.

Prior to AIX 4.2.1, the nfsd and biod daemons ran as multiple processes, and the number of each had to be tuned. Beginning with AIX 4.2.1, there is a single nfsd daemon and a single biod daemon, each of which is multithreaded (multiple kernel threads within the process). The number of threads is also self-tuning: additional threads are created as needed.

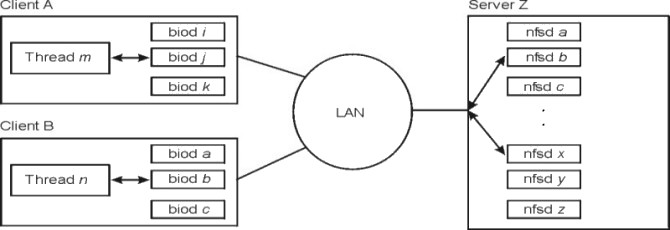

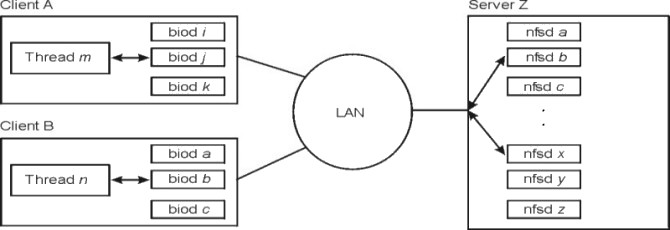

The following figure illustrates the structure of the dialog between NFS clients and a server. When a thread in a client system attempts to read or write a file in an NFS-mounted directory, the request is redirected from the usual I/O mechanism to one of the client's biod daemons. The biod daemon sends the request to the appropriate server, where it is assigned to one of the server's NFS daemons (nfsd daemon). While that request is being processed, neither the biod daemon nor the nfsd daemon involved does any other work.

Figure 10-1. NFS Client-Server Interaction. This illustration shows two clients and one server on a network that is laid out in a typical star topology. Client A is running thread m in which data is directed to one of its biod daemons. Client B is running thread n and directing data to its biod daemons. The respective daemons send the data across the network to server Z where it is assigned to one of the server's NFS (nfsd) daemons.


NFS uses Remote Procedure Calls (RPC) to communicate. RPCs are built on top of the External Data Representation (XDR) protocol, which transforms data to a generic format before transmission, allowing machines with different architectures to exchange information. The RPC library is a library of procedures that allows a local (client) process to direct a remote (server) process to execute a procedure call as if the local (client) process had executed the procedure call in its own address space. Because the client and server are two separate processes, they do not have to exist on the same physical system.

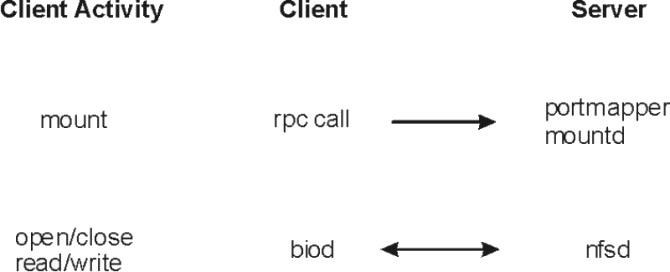

Figure 10-2. The Mount and NFS Process. This illustration is a three column table with Client Activity, Client, and Server as the three column headings. The first client activity is mount. An rpc call goes from the client to the server's portmapper mountd. The second client activity is open/close read/write. There is two-way interaction between the client's biod daemon and the server's nfsd daemon.


The portmap daemon, also called the portmapper, is a network service daemon that provides clients with a standard way of looking up the port number associated with a specific program. When services on a server are started, they register with the portmap daemon as available servers. The portmap daemon then maintains a table of program-to-port pairs.

When the client initiates a request to the server, it first contacts the portmap daemon to see where the service resides. The portmap daemon listens on a well-known port so the client does not have to look for it. The portmap daemon responds to the client with the port of the service that the client is requesting. The client, upon receipt of the port number, is able to make all of its future requests directly to the application.
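The lookup described above can be modeled in a few lines. This is a minimal sketch, not the RPC wire protocol: the `Portmap` and `Client` classes are hypothetical, the mountd port in the usage note is made up, and only the well-known portmap port (111) and the RPC program numbers are standard values.

```python
# Toy model of the portmap lookup; class and method names are hypothetical.
PORTMAP_PORT = 111      # well-known port on which the portmap daemon listens
MOUNTD_PROGRAM = 100005  # standard RPC program number for mountd
NFS_PROGRAM = 100003     # standard RPC program number for NFS


class Portmap:
    """In-memory program-to-port table keyed by (program, version, protocol)."""

    def __init__(self):
        self._table = {}

    def register(self, program, version, protocol, port):
        # A service announces the port it is listening on.
        self._table[(program, version, protocol)] = port

    def lookup(self, program, version, protocol):
        # Port 0 means "program not registered".
        return self._table.get((program, version, protocol), 0)


class Client:
    """Remembers looked-up ports so later requests go straight to the service."""

    def __init__(self, portmap):
        self._portmap = portmap
        self._ports = {}
        self.lookups = 0  # number of times the portmap daemon was contacted

    def port_for(self, program, version, protocol):
        key = (program, version, protocol)
        if key not in self._ports:
            self.lookups += 1
            port = self._portmap.lookup(*key)
            if port == 0:
                return 0  # do not cache a failed lookup
            self._ports[key] = port
        return self._ports[key]
```

For example, after `pm.register(MOUNTD_PROGRAM, 3, "tcp", 32780)` (an arbitrary port chosen for illustration), two successive calls to `Client(pm).port_for(MOUNTD_PROGRAM, 3, "tcp")` on the same client contact the table only once.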

The mountd daemon is a server daemon that answers a client request to mount a server's exported file system or directory. The mountd daemon determines which file system is available by reading the /etc/xtab file. The mount process takes place as follows:

The client only contacts the portmap daemon on its very first mount request after a system restart. Once the client knows the port number of the mountd daemon, the client goes directly to that port number for any subsequent mount request.

The biod daemon is the block input/output daemon and is required in order to perform read-ahead and write-behind requests, as well as directory reads. The biod daemon improves NFS performance by filling or emptying the buffer cache on behalf of the NFS clients. When a user on a client system wants to read or write to a file on a server, the biod daemon sends the requests to the server. The following NFS operations are sent directly to the server from the operating system's NFS client kernel extension and do not require the use of the biod daemon:

Prior to AIX 4.2.1, the default number of biod daemons was six, and the number could be increased or decreased as necessary for performance.

The nfsd daemon is the active agent providing NFS services from the NFS server. The receipt of any one NFS protocol request from a client requires the dedicated attention of an nfsd daemon until that request is satisfied and the results of the request processing are sent back to the client. Prior to AIX 4.2.1, the default number of nfsd daemons was eight, and the number could be increased or decreased as necessary for performance.

NFS Version 3 was introduced in AIX 4.2.1. The operating system can support both NFS Version 2 and Version 3 on the same machine.

Prior to AIX 4.2.1, UDP was the exclusive transport protocol for NFS packets. TCP was added as an alternative protocol in AIX 4.2.1. UDP works efficiently over clean or efficient networks with responsive servers. For wide area networks, busy networks, or networks with slower servers, TCP may provide better performance because its inherent flow control can minimize retransmits on the network.

NFS Version 3 can enhance performance in many ways:

Applications running on client systems may periodically write data to a file, changing the file's contents. The amount of time an application waits for its data to be written to stable storage on the server is a measurement of the write throughput of a global file system. Write throughput is therefore an important aspect of performance. All global file systems, including NFS, must ensure that data is safely written to the destination file while at the same time minimizing the impact of server latency on write throughput.

The NFS Version 3 protocol offers a better way to increase write throughput by eliminating the synchronous write requirement of NFS Version 2 while retaining the benefits of close-to-open semantics. The NFS Version 3 client significantly reduces the number of write requests it makes to the server by collecting multiple requests and then writing the collective data through to the server's cache, but not necessarily to disk. Subsequently, NFS submits a commit request to the server that ensures that the server has written all the data to stable storage. This feature, referred to as safe asynchronous writes, can vastly reduce the number of disk I/O requests on the server, thus significantly improving write throughput.

The writes are considered "safe" because status information on the data is maintained, indicating whether it has been stored successfully. Therefore, if the server crashes before a commit operation, the client will know by looking at the status indication whether to resubmit a write request when the server comes back up.
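The write-then-commit sequence and the recovery after a server crash can be sketched as a small simulation. This is an illustration under stated assumptions, not the client implementation: the `Server` and `Client` classes are hypothetical, and the status indication is modeled as a simple restart counter (`boot_id`) returned with every write and commit reply.

```python
class Server:
    """Toy server: writes land in cache, commit flushes them to stable storage."""

    def __init__(self):
        self.boot_id = 1   # assumed stand-in for the status indication
        self.cache = {}    # offset -> data held only in memory
        self.disk = {}     # offset -> data on stable storage

    def write(self, offset, data):
        self.cache[offset] = data
        return self.boot_id

    def commit(self):
        self.disk.update(self.cache)
        self.cache.clear()
        return self.boot_id

    def crash_and_restart(self):
        self.cache.clear()  # uncommitted data is lost
        self.boot_id += 1


class Client:
    """Keeps every uncommitted write until a commit confirms it."""

    def __init__(self, server):
        self.server = server
        self.pending = {}   # offset -> (data, status seen at write time)

    def write(self, offset, data):
        status = self.server.write(offset, data)
        self.pending[offset] = (data, status)

    def commit(self):
        """Commit pending writes; return how many had to be resubmitted."""
        status = self.server.commit()
        stale = {off: data for off, (data, seen) in self.pending.items()
                 if seen != status}
        if stale:
            # The status changed, so the server restarted: resend and recommit.
            # A second crash during this step is not handled in this sketch.
            for off, data in stale.items():
                self.server.write(off, data)
            self.server.commit()
        self.pending.clear()
        return len(stale)
```

If the server restarts between the writes and the commit, `Client.commit()` notices the changed status, resubmits the affected writes, and the data still reaches `Server.disk`.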

NFS sequential read throughput as measured at the client is enhanced via the VMM read-ahead and caching mechanisms. Read-ahead allows file data to be transferred to the client from the NFS server in anticipation of that data being requested by an NFS client application. By the time the request for data is issued by the application, it is possible that the data resides already in the client's memory, and thus the request can be satisfied immediately. VMM caching allows re-reads of file data to occur instantaneously, assuming that the data was not paged out of client memory which would necessitate retrieving the data again from the NFS server.

In addition, CacheFS (see Cache File System (CacheFS)) may be used to further enhance read throughput in environments with memory-limited clients, very large files, or slow network segments by adding the potential to satisfy read requests from file data residing in a local disk cache on the client.

Because read data can sometimes reside in the cache for extended periods of time in anticipation of demand, clients must check that their cached data remains valid if a change is made to the file by another application. Therefore, the NFS client periodically acquires the file's attributes, which include the time the file was last modified. Using the modification time, a client can determine whether its cached data is still valid.
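The modification-time check can be sketched as follows. This is a simplified model under assumptions: `FakeServer`, `CachedFile`, and the three-second `attr_timeout` are hypothetical names and values chosen for illustration, and time is passed in explicitly so the behavior is deterministic.

```python
class FakeServer:
    """Hypothetical stand-in for a remote file with attributes and contents."""

    def __init__(self, content):
        self.content = content
        self.mtime = 1
        self.reads = 0  # number of times file data was fetched

    def modify(self, content):
        self.content = content
        self.mtime += 1

    def getattr(self):
        return {"mtime": self.mtime}

    def read(self):
        self.reads += 1
        return self.content


class CachedFile:
    """Client-side cache that revalidates against the server's mtime."""

    def __init__(self, server, attr_timeout=3.0):
        self.server = server
        self.attr_timeout = attr_timeout  # seconds between attribute checks (assumed)
        self.data = None
        self.mtime = None
        self.checked_at = None

    def read(self, now):
        # Re-fetch attributes only when the previous check is too old.
        if self.checked_at is None or now - self.checked_at >= self.attr_timeout:
            mtime = self.server.getattr()["mtime"]
            self.checked_at = now
            if mtime != self.mtime:
                self.data = None  # file changed: cached data is invalid
                self.mtime = mtime
        if self.data is None:
            self.data = self.server.read()
        return self.data
```

Between attribute checks the client can serve stale data. A shorter interval narrows that window but adds attribute requests, which is the trade-off the surrounding text describes.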

Keeping attribute requests to a minimum makes the client more efficient and minimizes server load, thus increasing scalability and performance. Therefore, NFS Version 3 was designed to return attributes for all operations. This increases the likelihood that the attributes in the cache are up to date and thus reduces the number of separate attribute requests.

NFS Version 2 has an 8 KB maximum buffer-size limitation, which restricts the amount of NFS data that can be transferred over the network at one time. In NFS Version 3, this limitation has been relaxed. The default read/write size is 32 KB for this operating system's NFS, enabling NFS to construct and transfer larger chunks of data. This feature allows NFS to more efficiently use high bandwidth network technologies such as FDDI, 100baseT and 1000baseT Ethernet, and the SP Switch, and contributes substantially to NFS performance gains in sequential read/write performance.
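A quick calculation shows the effect of the larger transfer size on the number of read or write requests. This is a back-of-the-envelope sketch: the 1 MB file size is an arbitrary example, and the function counts only data transfer requests, ignoring attribute and commit traffic.

```python
KB = 1024


def transfer_requests(file_bytes, transfer_bytes):
    """Number of read or write requests needed to move file_bytes (ceiling division)."""
    return (file_bytes + transfer_bytes - 1) // transfer_bytes


file_size = 1024 * KB                                # example: a 1 MB file
v2_requests = transfer_requests(file_size, 8 * KB)   # Version 2 maximum buffer size
v3_requests = transfer_requests(file_size, 32 * KB)  # Version 3 default on this system

print(v2_requests, v3_requests)  # prints: 128 32
```

With these sizes, the same file needs a quarter as many requests at 32 KB as at 8 KB.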

A full directory listing (such as that produced by the ls -l command) requires that name and attribute information be acquired from the server for all entries in the directory listing. NFS Version 2 clients query the server separately for the list of file and directory names and then, through lookup requests, for the attribute information of each directory entry. With NFS Version 3, the names list and attribute information are returned at one time, relieving both client and server from performing multiple tasks. However, in some environments, the NFS Version 3 readdirplus() operation might cause slower performance.
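The difference in request counts for a full directory listing can be estimated with a rough model. The sketch below makes simplifying assumptions: the number of entries that fit in one reply is a made-up parameter, a Version 2 client issues one lookup per entry, and client-side caching is ignored.

```python
def ceil_div(a, b):
    """Integer ceiling division."""
    return (a + b - 1) // b


def v2_listing_requests(entries, names_per_reply):
    """Version 2: readdir requests for the names, plus one lookup per entry."""
    readdirs = max(1, ceil_div(entries, names_per_reply))
    return readdirs + entries


def v3_listing_requests(entries, entries_per_reply):
    """Version 3: readdirplus returns names and attributes together."""
    return max(1, ceil_div(entries, entries_per_reply))


# Hypothetical directory of 1000 entries; the reply capacities are assumed values.
print(v2_listing_requests(1000, 100))  # prints: 1010
print(v3_listing_requests(1000, 50))   # prints: 20
```

Each readdirplus reply carries more data per entry than a plain readdir reply, so fewer entries fit in one reply. The saving comes from eliminating the per-entry lookups.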

Other changes in AIX 4.2.1 (for both NFS Version 2 and Version 3) include changes to the nfsd, biod, and rpc.lockd daemons. Prior to AIX 4.2.1, the nfsd and biod daemons ran as multiple processes, and the number of each had to be tuned. Beginning with AIX 4.2.1, there is a single nfsd daemon and a single biod daemon, each of which is multithreaded (multiple kernel threads within the process). The number of threads is also self-tuning: additional threads are created as needed. You can, however, tune the maximum number of threads by using the nfso command, and you can tune the maximum number of biod threads per mount by using the biods mount option.

The rpc.lockd daemon was also changed to be multithreaded, so that many lock requests can be handled simultaneously.

Prior to AIX 4.3, the default protocol was UDP for both NFS Version 2 and NFS Version 3. With AIX 4.3 and later, TCP is the default protocol for NFS Version 3, and the client can select a different transport protocol for each mount by using the mount command options. The proto mount option selects TCP or UDP. For example:

# mount -o proto=tcp