The vmtune command can be used to modify the VMM parameters that control the behavior of the memory-management subsystem. Some options are available to alter the defaults for LVM and file systems; the options dealing with disk I/O are discussed in the following sections.

To determine whether the vmtune command is installed and available, run the following command:

# lslpp -lI bos.adt.samples

The executable program for the vmtune command is found in the /usr/samples/kernel directory. The vmtune command can only be executed by the root user. Changes made by this tool remain in place until the next reboot of the system. If a permanent change is needed, place an appropriate entry in the /etc/inittab file. For example:

vmtune:2:wait:/usr/samples/kernel/vmtune -P 50

The VMM sequential read-ahead feature, described in Sequential-Access Read Ahead, can enhance the performance of programs that access large files sequentially.

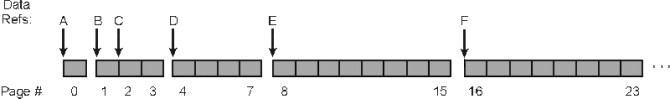

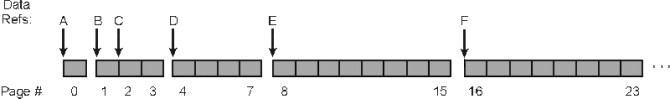

The following illustrates a typical read-ahead situation.

In this example, minpgahead is 2 and maxpgahead is 8 (the defaults). The program is processing the file sequentially. Only the data references that have significance to the read-ahead mechanism are shown, designated by A through F. The sequence of steps is:

If the program were to deviate from the sequential-access pattern and access a page of the file out of order, sequential read-ahead would be terminated. It would be resumed with minpgahead pages if the VMM detected that the program resumed sequential access.
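The growth of the read-ahead window can be sketched with a small shell function. This is an illustration only, not the VMM's code: it assumes the number of pages read ahead starts at minpgahead and doubles on each sequential trigger until it reaches maxpgahead. The function name `readahead_sizes` is hypothetical.

```shell
#!/bin/sh
# Sketch of the read-ahead growth pattern (assumption: the count doubles
# on each sequential trigger, capped at maxpgahead). Not the VMM's code.
# Usage: readahead_sizes MINPGAHEAD MAXPGAHEAD COUNT
readahead_sizes() {
    min=$1 max=$2 count=$3
    pages=$min
    out=""
    i=0
    while [ "$i" -lt "$count" ]; do
        out="$out${out:+ }$pages"          # record this read-ahead size
        pages=$((pages * 2))               # double for the next trigger
        [ "$pages" -gt "$max" ] && pages=$max
        i=$((i + 1))
    done
    echo "$out"
}

# Defaults from the example: minpgahead=2, maxpgahead=8
readahead_sizes 2 8 5    # prints: 2 4 8 8 8
```

After an out-of-order access, the same sequence would start again from minpgahead once sequential access is detected.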

The minpgahead and maxpgahead values can be changed by using options -r and -R in the vmtune command. If you are contemplating changing these values, keep in mind:

Write-behind involves asynchronously writing modified pages in memory to disk after reaching a threshold rather than waiting for the syncd daemon to flush the pages to disk. This is done to limit the number of dirty pages in memory, reduce system overhead, and minimize disk fragmentation. There are two types of write-behind: sequential and random.

By default, a file is partitioned into 16 K partitions or 4 pages. Each of these partitions is called a cluster. If all 4 pages of this cluster are dirty, then as soon as a page in the next partition is modified, the 4 dirty pages of this cluster are scheduled to go to disk. Without this feature, pages would remain in memory until the syncd daemon runs, which could cause I/O bottlenecks and fragmentation of the file.

The number of clusters that the VMM uses as a threshold is tunable. The default is one cluster. You can delay write-behind by increasing the numclust parameter using the vmtune -c command.
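To get a feel for how much data a given numclust setting covers, the arithmetic can be sketched as follows. The function name `writebehind_threshold_kb` is hypothetical; the 4 KB page size and 4-page cluster are the defaults stated above.

```shell
#!/bin/sh
# Arithmetic sketch only: how many KB of a file numclust clusters span.
PAGE_KB=4             # 4096-byte pages
PAGES_PER_CLUSTER=4   # default 16 K cluster

writebehind_threshold_kb() {
    numclust=$1
    echo $((numclust * PAGES_PER_CLUSTER * PAGE_KB))
}

writebehind_threshold_kb 1    # prints: 16  (the default, one cluster)
writebehind_threshold_kb 8    # prints: 128
```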

For enhanced JFS, the vmtune -j command is used to specify the number of pages to be scheduled at one time, rather than the number of clusters. The default number of pages for vmtune -j is 8.

There may be applications that perform a lot of random I/O, that is, the I/O pattern does not meet the requirements of the write-behind algorithm and thus all the pages stay resident in memory until the syncd daemon runs. If the application has modified many pages in memory, this could cause a very large number of pages to be written to disk when the syncd daemon issues a sync() call.

The random write-behind feature provides a mechanism such that when the number of dirty pages in memory for a given file exceeds a defined threshold, the pages beyond that threshold are scheduled to be written to disk.

The administrator can tune the threshold by using the -W option of the vmtune command. The parameter to tune is maxrandwrt; the default value is 0 indicating that random write-behind is disabled. Increasing this value to 128 indicates that once 128 memory-resident pages of a file are dirty, any subsequent dirty pages are scheduled to be written to the disk. The first set of pages will be flushed after a sync() call.
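The threshold behavior can be pictured with a simplified model. The sketch below is not how the VMM is implemented; it only restates the rule above: with maxrandwrt set to 0 nothing is scheduled, and otherwise only dirty pages beyond the threshold are scheduled. The function name `pages_scheduled` is hypothetical.

```shell
#!/bin/sh
# Simplified model of random write-behind (illustration only).
# Usage: pages_scheduled DIRTY_PAGES MAXRANDWRT
pages_scheduled() {
    dirty=$1 maxrandwrt=$2
    if [ "$maxrandwrt" -eq 0 ] || [ "$dirty" -le "$maxrandwrt" ]; then
        echo 0                           # disabled, or still under the threshold
    else
        echo $((dirty - maxrandwrt))     # pages beyond the threshold
    fi
}

pages_scheduled 200 128   # prints: 72
pages_scheduled 100 128   # prints: 0
pages_scheduled 200 0     # prints: 0 (random write-behind disabled)
```

In this model the first 128 dirty pages stay in memory until a sync() call, which matches the last sentence of the paragraph above.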

For enhanced JFS, the vmtune options j2_nRandomCluster (-z flag) and j2_maxRandomWrite (-J flag) are used to tune random write-behind. Both options have a default of 0. The j2_maxRandomWrite option has the same function for enhanced JFS as maxrandwrt does for JFS: it specifies a limit for the number of dirty pages per file that can remain in memory. The j2_nRandomCluster option specifies how many clusters apart two consecutive writes must be in order to be considered random.

JFS file I/Os that are not sequential will accumulate in memory until certain conditions are met:

If too many pages accumulate before one of these conditions occurs, then when pages do get flushed by the syncd daemon, the i-node lock is obtained and held until all dirty pages have been written to disk. During this time, threads trying to access that file are blocked because the i-node lock is not available. Remember that the syncd daemon currently flushes all dirty pages of a file, but one file at a time. On systems with large amounts of memory and large numbers of modified pages, high peaks of I/O can occur when the syncd daemon flushes the pages.

A tunable option called sync_release_ilock was added in AIX 4.3.2. It is set with the -s option of the vmtune command: a value of 1 releases the i-node lock while the modified pages of the file are being flushed, and a value of 0 keeps the old behavior. Releasing the lock can result in better response time when accessing the file during a sync() call.

This blocking effect can also be minimized by increasing the frequency of syncs in the syncd daemon. To do so, change the line in /sbin/rc.boot that invokes the syncd daemon, then reboot the system for the change to take effect. To change the running system without a reboot, kill the syncd daemon and restart it with the new seconds value.

A third way to tune this behavior is by turning on random write-behind using the vmtune command (see VMM Write-Behind).

The following vmtune parameters can be useful in tuning disk I/O:

If there are many simultaneous or large I/Os to a file system, or large sequential I/Os to a file system, the I/Os might bottleneck at the file system level while waiting for bufstructs. The number of bufstructs per file system (known as numfsbufs) can be increased using the vmtune -b command. The value takes effect only when a file system is mounted, so after changing the value you must unmount the file system and mount it again. The default value for numfsbufs is currently 93 bufstructs per file system.
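The order of operations matters here, so the sequence is sketched below as a dry run that only prints the commands. The value 200 and the mount point /data are hypothetical placeholders, and the function name `plan_numfsbufs_change` is made up for this illustration; the vmtune path and the -b flag are the ones given in this document.

```shell
#!/bin/sh
# Dry run: prints the commands in order; it does not execute them.
VMTUNE=/usr/samples/kernel/vmtune   # location given earlier in this document

# Usage: plan_numfsbufs_change NUMFSBUFS MOUNT_POINT
plan_numfsbufs_change() {
    numfsbufs=$1    # new bufstruct count (hypothetical value)
    fs=$2           # file system to remount (hypothetical mount point)
    echo "$VMTUNE -b $numfsbufs"    # 1. set the new value
    echo "umount $fs"               # 2. unmount the file system
    echo "mount $fs"                # 3. mount it again to pick up the value
}

plan_numfsbufs_change 200 /data
```

File systems that stay mounted across the change keep the number of bufstructs they were mounted with.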

In Enhanced JFS, the number of bufstructs per file system is specified with the j2_nBufferPerPagerDevice parameter. The default is currently 512 bufstructs per Enhanced JFS file system. The value can be increased using the vmtune -Z command and takes effect only when a file system is mounted.

If an application issues very large raw I/Os rather than writing through the file system, the same type of bottleneck as for file systems could occur at the LVM layer. Very large I/Os combined with very fast I/O devices would be required to move the bottleneck to the LVM layer. If it does happen, the lvm_bufcnt parameter can be increased with the vmtune -u command to provide a larger number of "uphysio" buffers. The value takes effect immediately. The current default value is 9 "uphysio" buffers. Because the LVM currently splits I/Os into 128 K pieces, the default allows 9*128 K (1152 K) to be written at one time. If you are doing I/Os that are larger than 9*128 K, increasing lvm_bufcnt may be advantageous.
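As a rough sizing aid, the number of 128 K pieces a single raw I/O is split into can be computed with ceiling division. This is arithmetic only, under the 128 K split size stated above; the function name `uphysio_bufs_needed` is hypothetical, and the result is a starting point for experiments rather than a recommended setting.

```shell
#!/bin/sh
# Arithmetic sketch: 128 K pieces needed to cover one raw I/O.
LVM_SPLIT_KB=128

# Usage: uphysio_bufs_needed IO_SIZE_KB
uphysio_bufs_needed() {
    io_kb=$1
    echo $(( (io_kb + LVM_SPLIT_KB - 1) / LVM_SPLIT_KB ))   # round up
}

uphysio_bufs_needed 1152   # prints: 9   (fits the default lvm_bufcnt)
uphysio_bufs_needed 2048   # prints: 16  (larger than 9*128 K)
```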

The hd_pbuf_cnt parameter (-B) controls the number of pbufs available to the LVM device driver. Pbufs are pinned memory buffers used to hold I/O requests that are pending at the LVM layer.

In AIX Version 4, sequential I/Os are coalesced so that a single pbuf is used for each sequential I/O request regardless of the number of pages in that I/O. As a result, a pbuf bottleneck is difficult to encounter with sequential I/O. With random I/O, the I/Os tend to get flushed sporadically, except when the syncd daemon runs.

The best way to determine whether a pbuf bottleneck is occurring is to examine an LVM variable called hd_pendqblked. The following script can provide the value of this variable:

#!/bin/ksh
# requires root authority to run
# determines number of times LVM had to wait on pbufs since system boot
addr=`echo "knlist hd_pendqblked" | /usr/sbin/crash 2>/dev/null | tail -1 | cut -f2 -d:`
value=`echo "od $addr 1 D" | /usr/sbin/crash 2>/dev/null | tail -1 | cut -f2 -d:`
echo "Number of waits on LVM pbufs are: $value"
exit 0

Starting with AIX 4.3.3, the command vmtune -a also displays the hd_pendqblked value (see fsbufwaitcnt and psbufwaitcnt).

The pd_npages parameter specifies the number of pages that should be deleted in one chunk from RAM when a file is deleted. Changing this value is likely to benefit only real-time applications that delete files. By reducing the value of pd_npages, a real-time application can get better response time because fewer pages are deleted before a process or thread is dispatched. The default value is the largest possible file size divided by the page size (currently 4096); if the largest possible file size is 2 GB, then pd_npages is 524288 by default. It can be changed with option -N.
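The default quoted above can be checked with a line of shell arithmetic. The sketch works in KB rather than bytes so that it stays within 32-bit shell arithmetic; the function name `default_pd_npages` is hypothetical.

```shell
#!/bin/sh
# Arithmetic check of the pd_npages default: max file size / page size.
# Usage: default_pd_npages MAX_FILE_KB [PAGE_KB]
default_pd_npages() {
    max_file_kb=$1
    page_kb=${2:-4}               # 4096-byte pages by default
    echo $((max_file_kb / page_kb))
}

# 2 GB expressed in KB is 2 * 1024 * 1024
default_pd_npages $((2 * 1024 * 1024))   # prints: 524288
```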

Setting the v_pinshm parameter to 1 (-S 1) causes pages in shared memory segments to be pinned by the VMM, provided that the application that calls shmget() specifies SHM_PIN as part of the flags. The default value is 0. This option is available only with AIX 4.3.3 and later.

Applications can provide a tunable that specifies whether the application should use the SHM_PIN flag (for example, the lock_sga parameter in Oracle 8.1.5 and later). Avoid pinning too much memory, because in that case no page replacement can occur. Pinning is useful because it saves overhead in async I/O from these shared memory segments (the async I/O kernel extension is not required to pin the buffers).

Two counters were added in AIX 5.1 that are incremented whenever a bufstruct is not available and the VMM puts a thread on the VMM wait list. Use the crash command, or the new fsbufwaitcnt and psbufwaitcnt fields in the output of the vmtune -a command, to examine the values of these counters. The following is an example of the output:

# vmtune -a
hd_pendqblked = 305
psbufwaitcnt = 0
fsbufwaitcnt = 337

One more counter, xpagerbufwaitcnt, was added in AIX 5.1. It is incremented whenever a bufstruct on an Enhanced JFS file system is not available. Use the vmtune -a command to examine the value of this counter. The following is an example of the output:

# vmtune -a

xpagerbufwaitcnt = 815
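For monitoring scripts, a single counter can be pulled out of this output with a small filter. The sketch below assumes the counters appear one per line in the "name = value" form shown in the examples above; the real vmtune -a layout may differ, so adjust the pattern if needed. The function name `get_counter` is hypothetical, and the sample text is copied from the examples in this section.

```shell
#!/bin/sh
# Reads "name = value" lines on stdin and prints the value for NAME.
# Assumption: one counter per line, fields separated by whitespace.
# Usage: ... | get_counter NAME
get_counter() {
    awk -v name="$1" '$1 == name && $2 == "=" { print $3; exit }'
}

# Sample lines taken from the example output in this section
sample='hd_pendqblked = 305
psbufwaitcnt = 0
fsbufwaitcnt = 337
xpagerbufwaitcnt = 815'

printf '%s\n' "$sample" | get_counter fsbufwaitcnt       # prints: 337
printf '%s\n' "$sample" | get_counter xpagerbufwaitcnt   # prints: 815
```

On a live system the filter would be fed from the command itself, for example `/usr/samples/kernel/vmtune -a | get_counter fsbufwaitcnt`. Because the counters accumulate, comparing two readings taken some time apart shows whether waits are still occurring.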